Visual Diagnostics for Constrained Optimisation with Application to Guided Tours

H. Sherry Zhang Monash

Dianne Cook Monash

Ursula Laa BOKU

Nicolas Langrené CSIRO Data61

Patricia Menéndez Monash

6th Jul 2021

- Hi everyone,

- my name is Sherry Zhang, a PhD student from Monash University in Australia

- Today I will be talking about ...

- This is a work supervised by

Motivation

The work also reveals inadequacies in the tour optimization algorithm, that may benefit from newly developed techniques and software tools. (Laa & Cook, 2020)

- For simulated data, the optimisers

- often failed at finding the expected maxima, or

- would only get close but not reach the maxima

- For noisy index functions it failed completely

- project, motivated by ursula and Di's work, that apply guided tour to physics problems.

- this is what they write in the paper

- they find that ...

- on the right is an example where

- the optimiser could have finished at a better position but it doesn't

How to solve this problem?

To understand where the optimisers were failing, ideally we need to visualise the space and the paths that the optimisers take through the space.

How to solve this problem?

To understand where the optimisers were failing, ideally we need to visualise the space and the paths that the optimisers take through the space.

- the space is the set of all d-dimensional projections of p-dimensional space.

intro:

- This is an interesting visualisation problem because

What is projection pursuit?

Data: Xn×p Basis: Ap×d

Projection: Y=X⋅A

Index function: f:Rn×d↦R

holes index:

∝1−1n∑ni=1exp(−12yiy′i)

density ↑, index value ↑

optimisation:

argmaxA∈Af(X⋅A) s.t. A′A=Id

Now I will introduce some background information on

- what is a guided tour

- what is the optimisation problem and

- what are the optimisers that we have

To understand guided tour, we first need to talk about projection pursuit

- we denote the data as a matrix of dimension ....

- Projection basis A, of dimension ..., characterises the direction from which the data get projected;

- projection

- Index function: maps the projection from an ... dimension space to a scalar

- Throughout this presentation until the case study in the end, we will be using an index function called holes index

- will not show the full formula here, but it is proportional to 1 - something that looks like a standardised normal density

- on the right I have calculated the index value of the holes index for different 1D projections and we can observe that

- for those projections that have high density regions, for examples, ..., it has an higher index value

- The optimisation we have is to: max the index function, subject to orthonormality constraint

Three random search optimisers

- Creeping random search (CRS):

- randomly samples a basis and evaluates its index value

- accepted if it has a higher index value, discarded if lower

- Simulated annealing (SA):

- a second chance to be accepted with probability

P=min{exp[−∣Icur−Il∣T(l)],1}where I(⋅) is the index value, T(l)=T0log(l+1), l is the # of eval (in the current iter).

- As l increases, T(l) decreases, and the probability for accepting an inferior basis decreases

- a second chance to be accepted with probability

P=min{exp[−∣Icur−Il∣T(l)],1}

To solve this optimisation problem, we have three optimisers. All of which are random search algorithms

creeping random search:

- that satisfies the orthonormality constraint

- the basis, accept

simulated annealing:

- follows the same as CRS in sampling and accepting better bases, but

- it has a different design for bases that have lower index value. These bases have

- that is to say, it is less likely to accept a worse basis after more bases have been evaluated

Three random search optimisers

(Cont.)

- Pseudo derivative (PD):

- randomly sample 5 directions close to the current and pick the most promising direction

- search along a straight line (on the sphere) for the best candidate

- same acceptance rule as CRS

Pseudo derivative:

- flavour, gradient a'scent, as it first find a promising direction and then compute a step size

- we don't use derivative here, hard to operate on a matrix. Instead,

- PD then uses the same acceptance rule as CRS

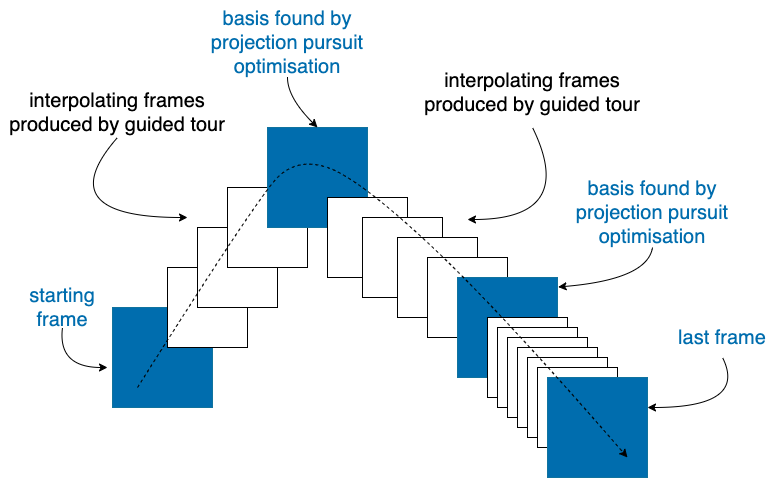

Projection pursuit with guided tour

- projection pursuit maximises the index function to iteratively find better basis/ projection (blue frames)

- guided tour chains these projections together through interpolation (white frames) and produces an smooth animation (see next slide )

This illustration shows how projection pursuit and guided tour works together

Those are shown in the blue frames

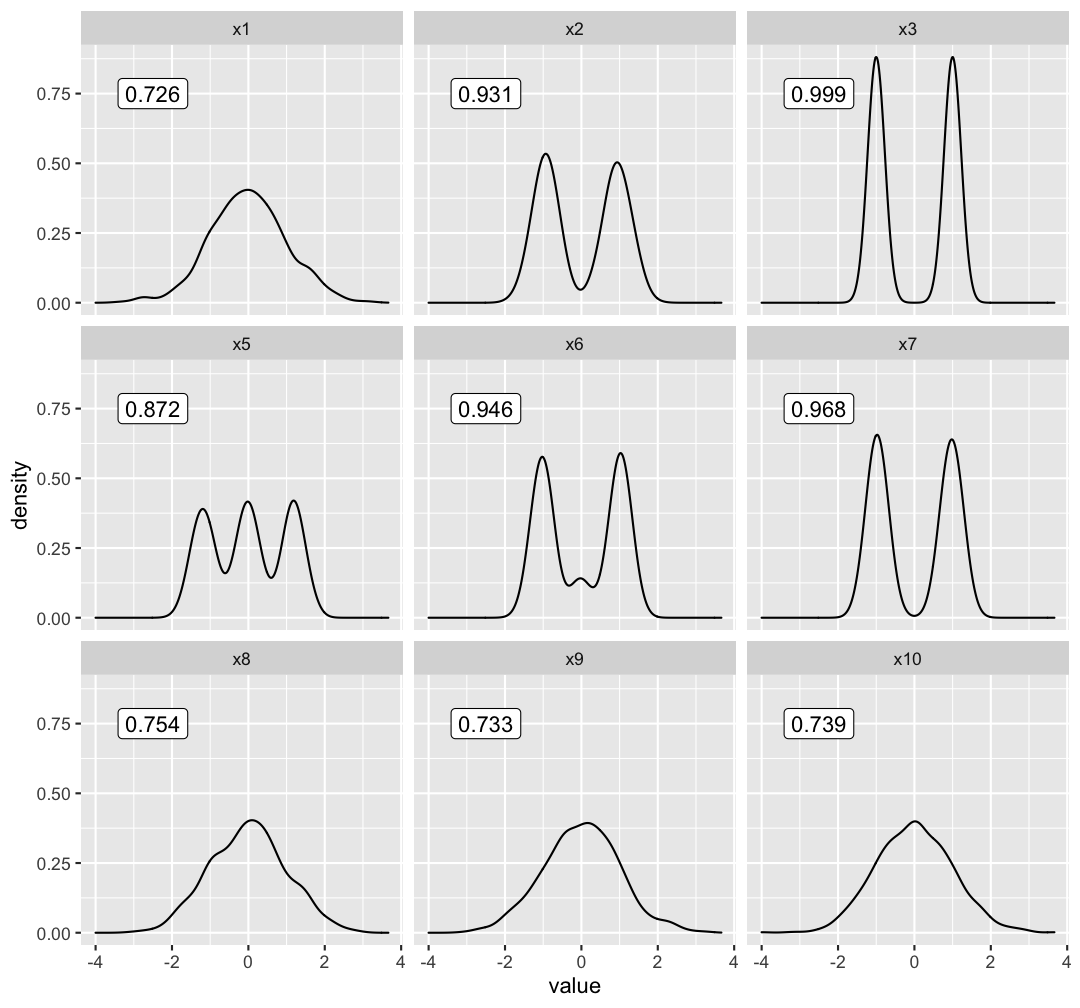

An example

1D projection: histogram

5 simulated variables

- x2 is mixture normal 0.5N(−3,1)+0.5N(3,1)

- others are random normal

We expect:

- x2 has weight close to 1

- others are close to 0

Here is how the animation looks like:

- We use histogram to display 1D projection

- The data include 5 variables, X2 is a mixture normal and others are random normal

- We expect: X2 to have a weight close to 1 and others close to 0

- In this simple example, the optimiser works well to find the optimum

- but this is not the case for all the problems, as we see in the literature

- so we need some visual tools to help us diagnose where things go wrong

- A side note here: this will also be the data that we used in later examples and we are always aiming to find x2 in this dataset

Ferrn package

Four functions to explore the trace and space of the optimisers:

explore_trace_search()explore_trace_interp()explore_space_pca()explore_space_tour()

Botanical theme:

scale_color_continuous_botanical()scale_color_discrete_botanical()

To produce plots:

<data> %>% <explore_*>() %>% <scale_*>()

* The syntax to adjust color in explore_space_tour() is slightly different

- This work leads to an R package, ferrn, where we create 4 diagnostic plots. Two for exploring the trace and two for space

- There is also a botanical theme for scale the color

- To produce a plot, you need to pipe the data collected from the optimisation into one of the explore functions and make color adjustment using the botanical palette

- Due to the time limit of this talk, I will go through the two space plots here and later you will have the chance to see the trace plot

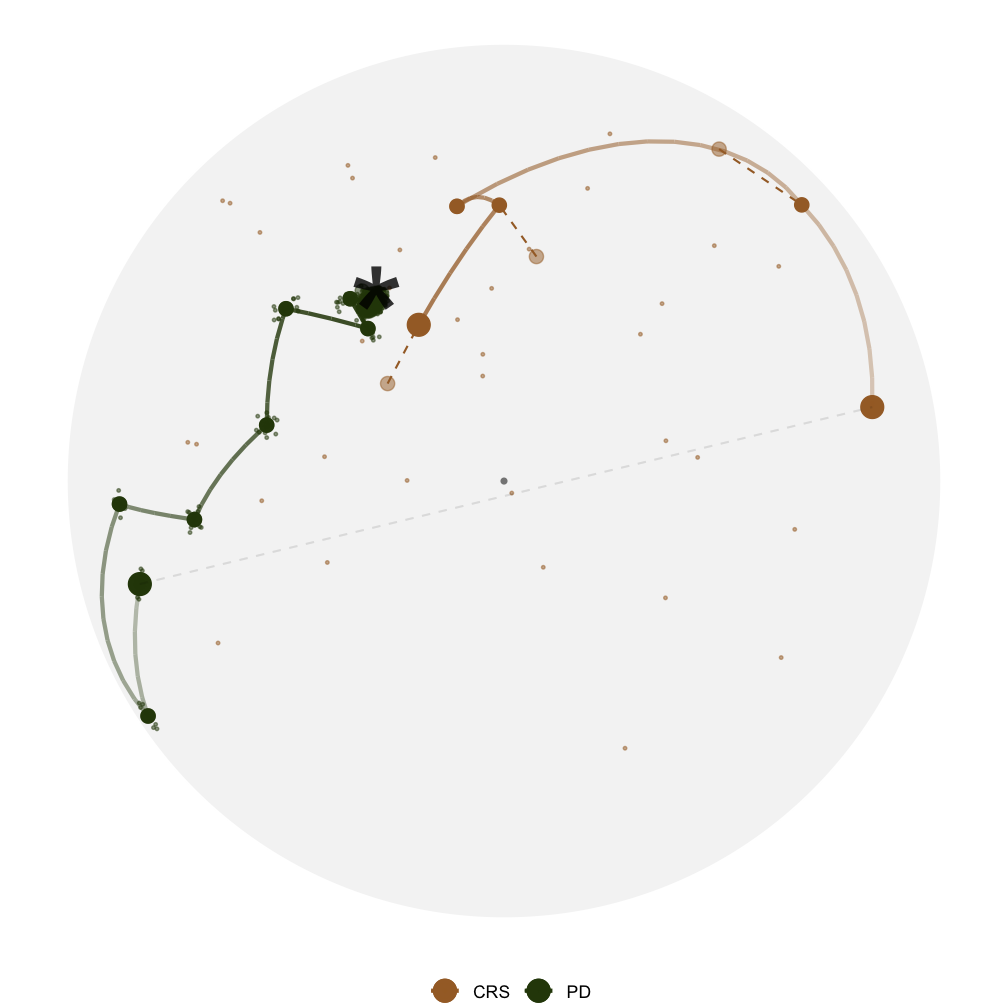

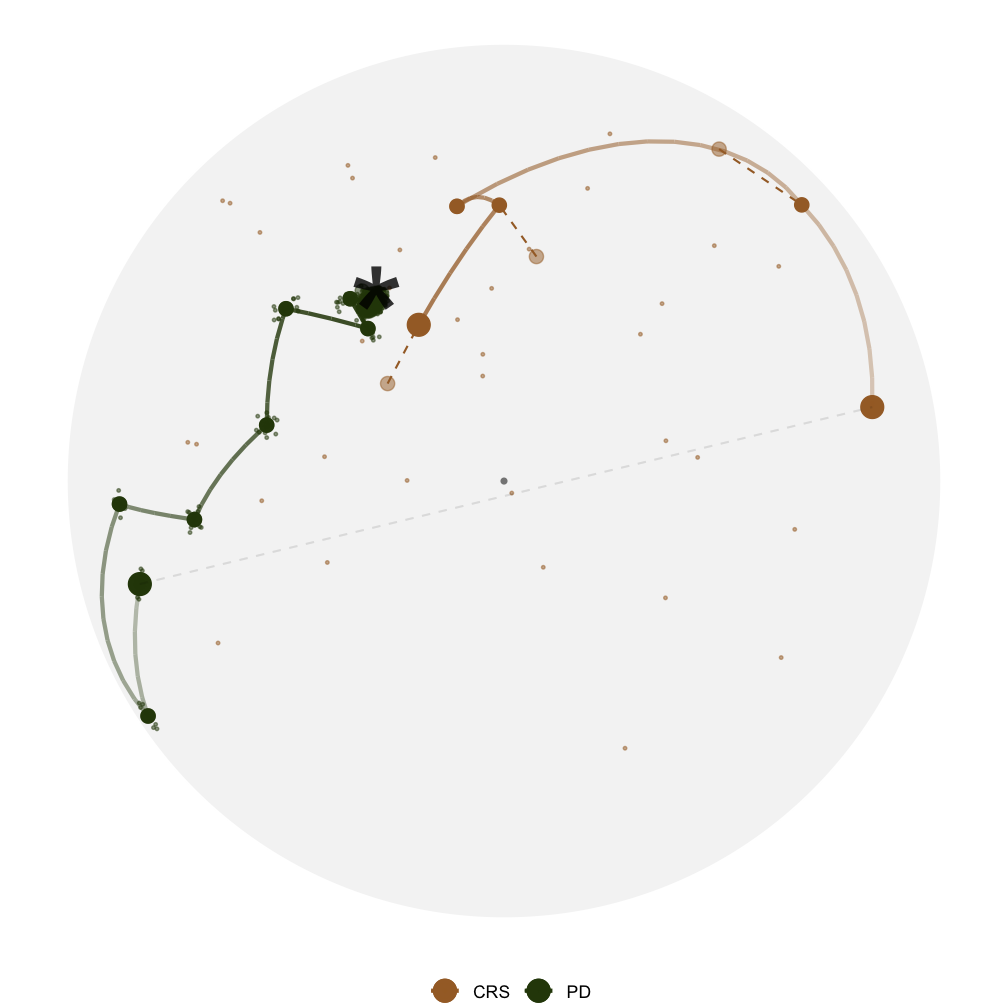

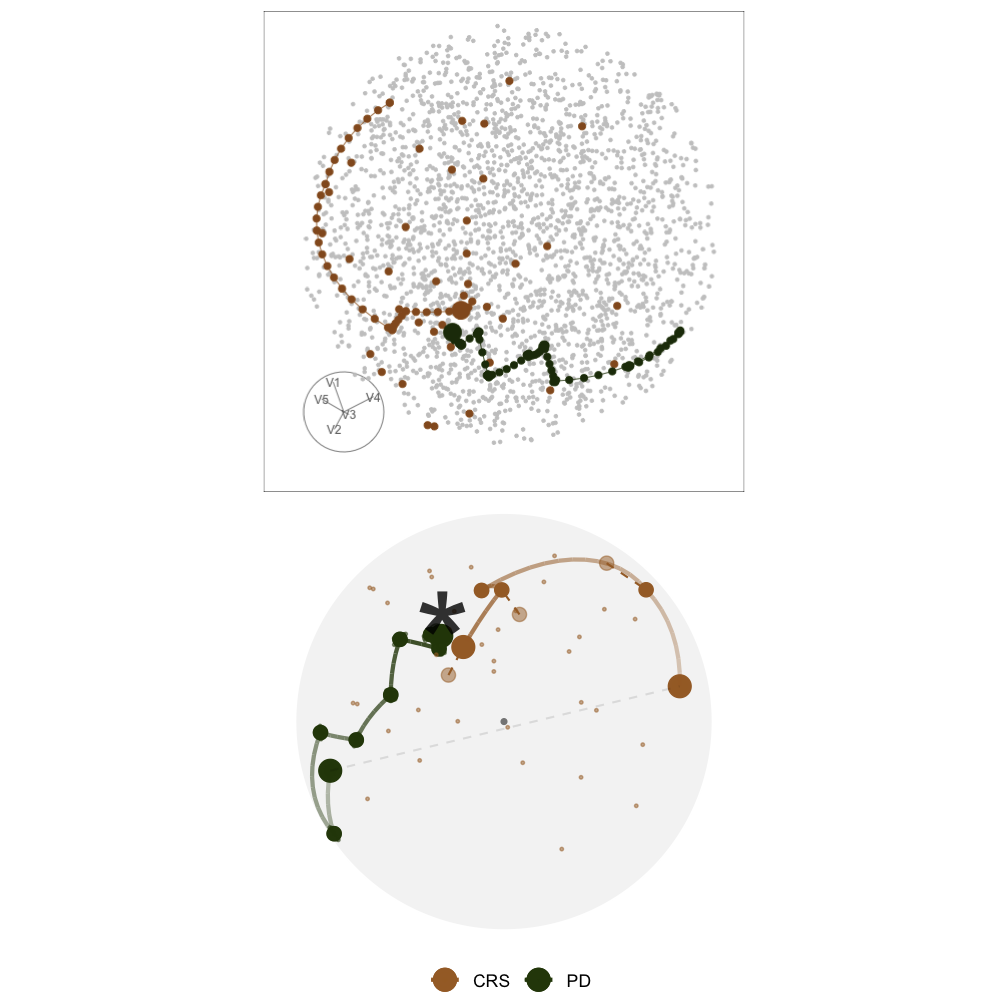

explore_space_pca()

This is the first space plot

First I'm going to tell you how this plot is made and then what we can learn from it

- We have all the bases evaluated in the optimisation - these are the coloured dots

- we also have random bases sampled from the 5D space, these are used to draw the space

- we then perform PCA on these two sets of bases and take the first two PCs to create this plot

In this plot

- The star is the theoretical best basis, corresponds to 1 in x2 and 0 in others

- we have two paths, CRS in brown and PD in green

- CRS evaluates random points in the space so you can see dots everywhere

- PD evaluates 5 directions locally, so you can see dots scattered around an accepted basis

- The paths here are the interpolation between bases accepted by projection pursuit

This plot tells us that ... and it helps us to ...

we can also make an animated version of this plot

- here we can see clearer where each optimiser starts

- an additional info, learn

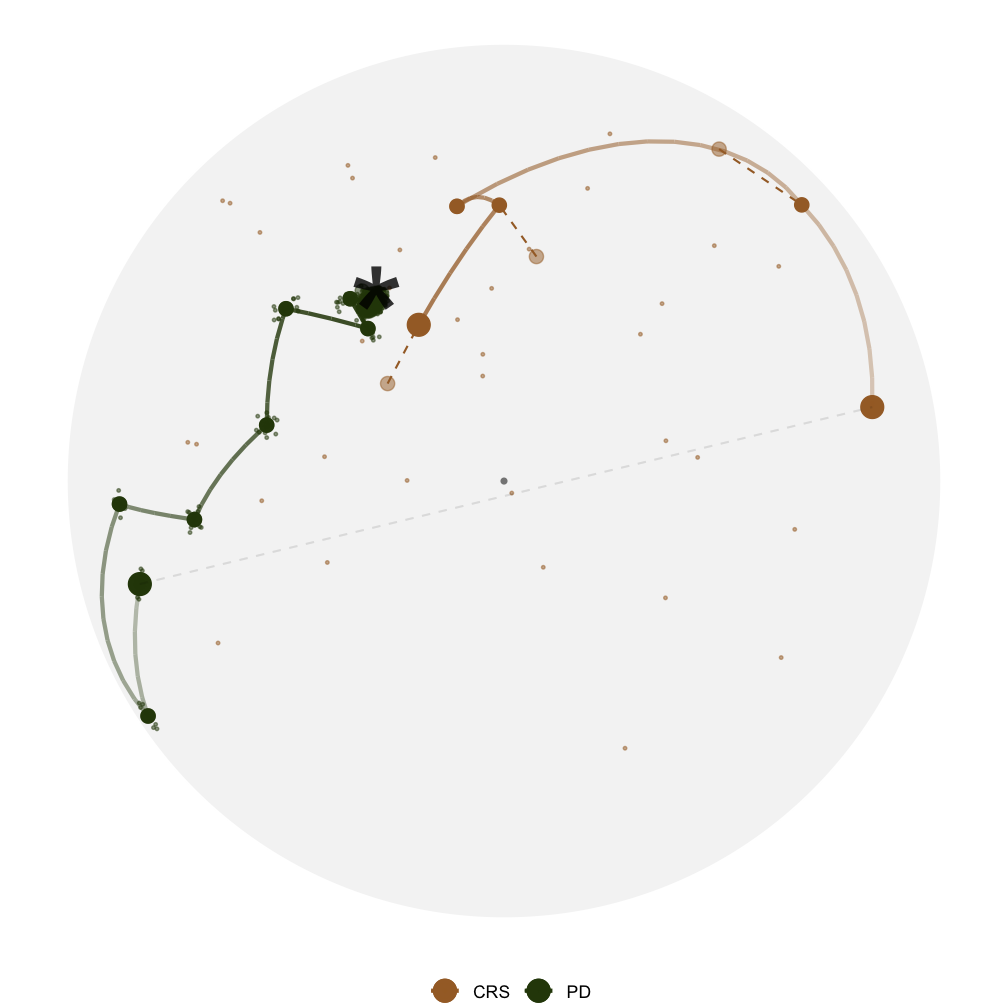

explore_space_pca()

- Both optimisers find the optimum but PD gets closer

- Visually understand how the optimisers work

This is the first space plot

First I'm going to tell you how this plot is made and then what we can learn from it

- We have all the bases evaluated in the optimisation - these are the coloured dots

- we also have random bases sampled from the 5D space, these are used to draw the space

- we then perform PCA on these two sets of bases and take the first two PCs to create this plot

In this plot

- The star is the theoretical best basis, corresponds to 1 in x2 and 0 in others

- we have two paths, CRS in brown and PD in green

- CRS evaluates random points in the space so you can see dots everywhere

- PD evaluates 5 directions locally, so you can see dots scattered around an accepted basis

- The paths here are the interpolation between bases accepted by projection pursuit

This plot tells us that ... and it helps us to ...

we can also make an animated version of this plot

- here we can see clearer where each optimiser starts

- an additional info, learn

explore_space_pca()

- Both optimisers find the optimum but PD gets closer

- Visually understand how the optimisers work

This is the first space plot

First I'm going to tell you how this plot is made and then what we can learn from it

- We have all the bases evaluated in the optimisation - these are the coloured dots

- we also have random bases sampled from the 5D space, these are used to draw the space

- we then perform PCA on these two sets of bases and take the first two PCs to create this plot

In this plot

- The star is the theoretical best basis, corresponds to 1 in x2 and 0 in others

- we have two paths, CRS in brown and PD in green

- CRS evaluates random points in the space so you can see dots everywhere

- PD evaluates 5 directions locally, so you can see dots scattered around an accepted basis

- The paths here are the interpolation between bases accepted by projection pursuit

This plot tells us that ... and it helps us to ...

we can also make an animated version of this plot

- here we can see clearer where each optimiser starts

- an additional info, learn

explore_space_pca()

- Both optimisers find the optimum but PD gets closer

- Visually understand how the optimisers work

CRS finds the optimum faster!

This is the first space plot

First I'm going to tell you how this plot is made and then what we can learn from it

- We have all the bases evaluated in the optimisation - these are the coloured dots

- we also have random bases sampled from the 5D space, these are used to draw the space

- we then perform PCA on these two sets of bases and take the first two PCs to create this plot

In this plot

- The star is the theoretical best basis, corresponds to 1 in x2 and 0 in others

- we have two paths, CRS in brown and PD in green

- CRS evaluates random points in the space so you can see dots everywhere

- PD evaluates 5 directions locally, so you can see dots scattered around an accepted basis

- The paths here are the interpolation between bases accepted by projection pursuit

This plot tells us that ... and it helps us to ...

we can also make an animated version of this plot

- here we can see clearer where each optimiser starts

- an additional info, learn

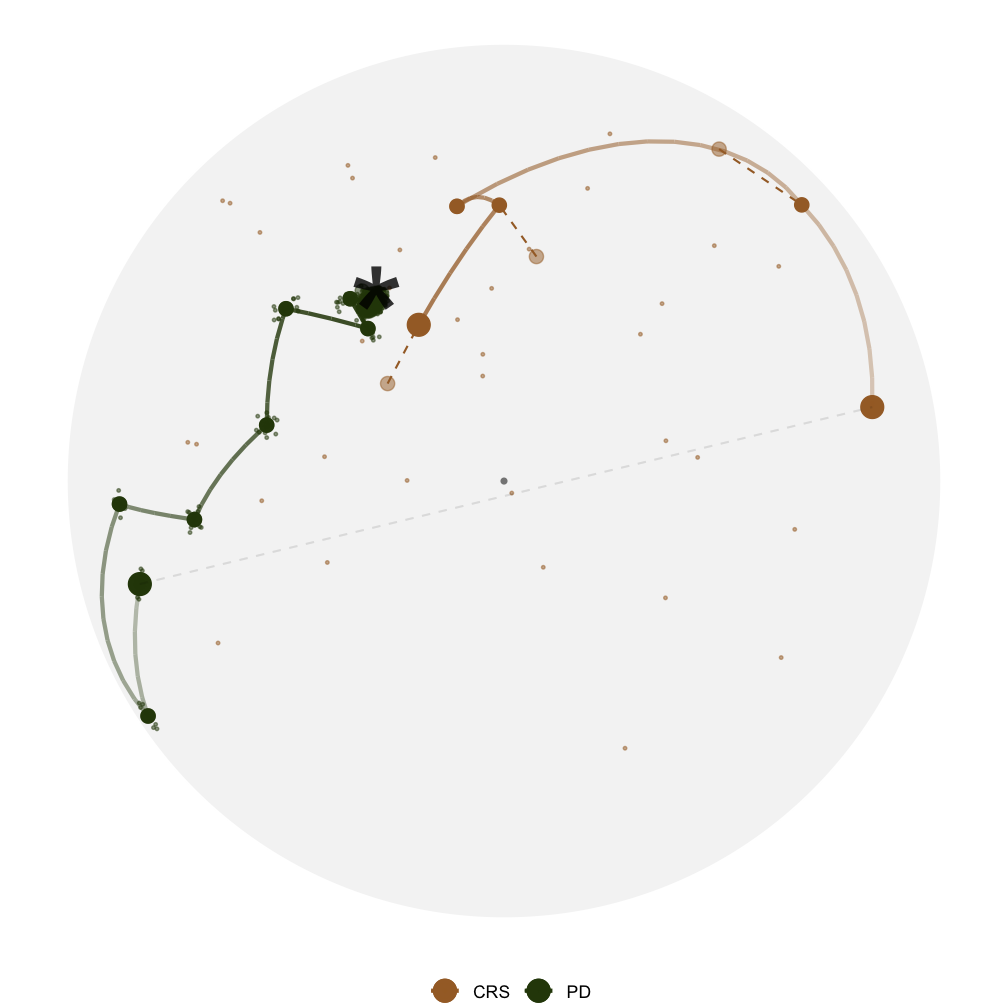

explore_space_tour()

The same two paths but on the 5D full space.

What I'm showing you now is the same two paths but on the full space. Let's watch this animation together:

- The full space is a 5D unit sphere since the orthonormality constraint requires the square of all the entries adds up to one

- Here is a frame in the animation that is most similar to the previous PCA view

What we learn here is that

- PCA, maximises variance, most spread, full space plot allows you to see the same path from different angles.

explore_space_tour()

The same two paths but on the 5D full space.

What I'm showing you now is the same two paths but on the full space. Let's watch this animation together:

- The full space is a 5D unit sphere since the orthonormality constraint requires the square of all the entries adds up to one

- Here is a frame in the animation that is most similar to the previous PCA view

What we learn here is that

- PCA, maximises variance, most spread, full space plot allows you to see the same path from different angles.

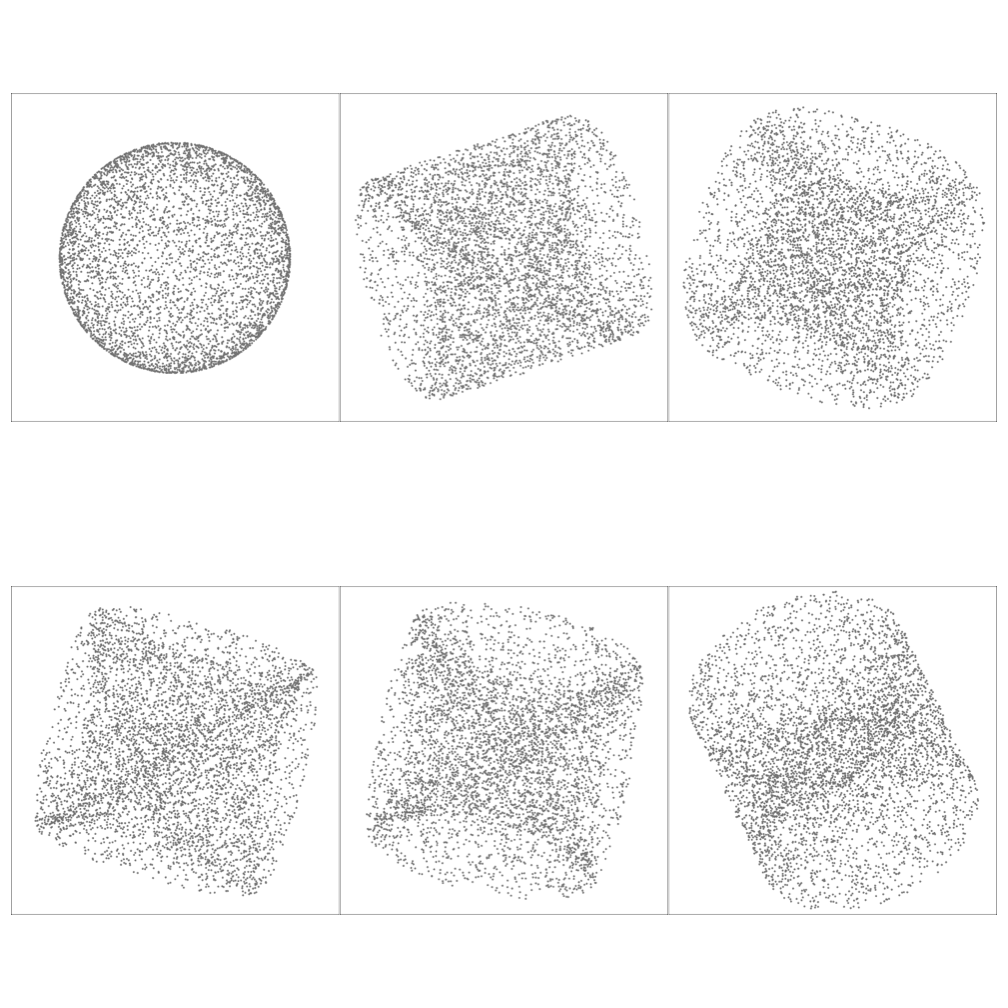

2D basis space

The basis space is no longer a sphere, but a torus!

Basis Ap×d where d=2

- similarly we can generate random 2D bases and animate it with 2D path embeded

- Here I have generated the 2D basis space and captured some frames from the animation

- This time,

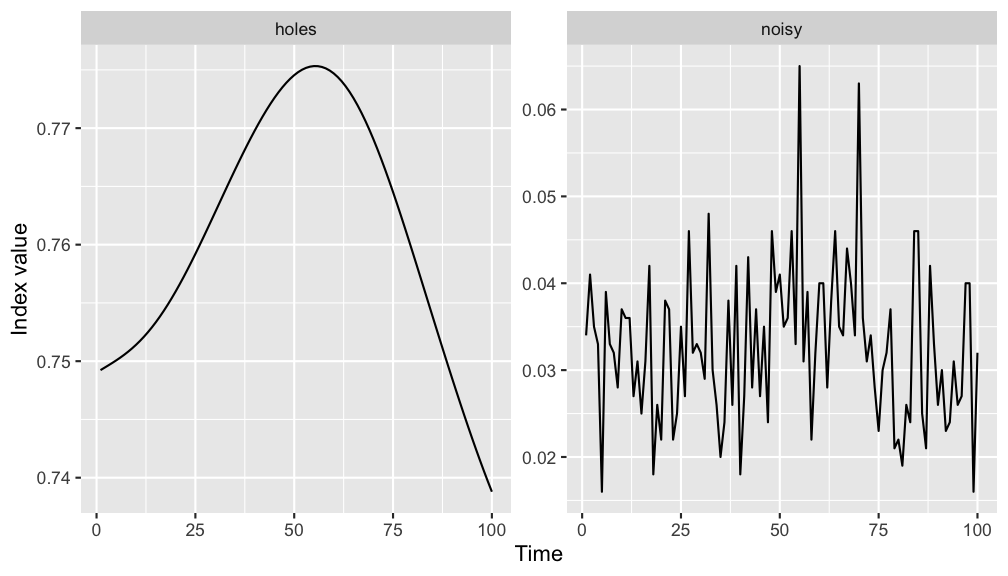

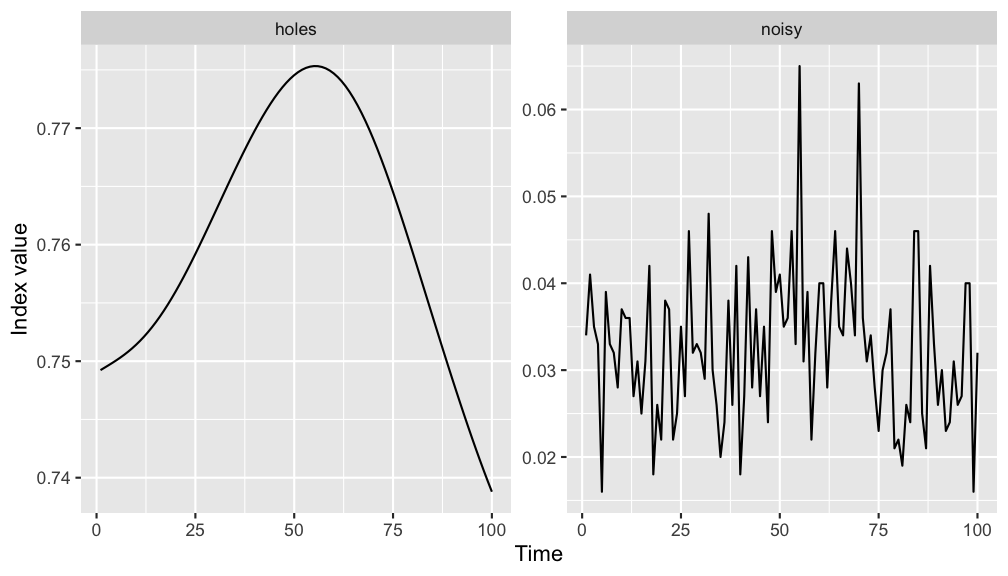

Case study: optimising a noisy index

Which optimiser(s) work and which didn't?

New index: I(n)=max[FY(n)−FN(n)]

where F.(n) is the ECDF function

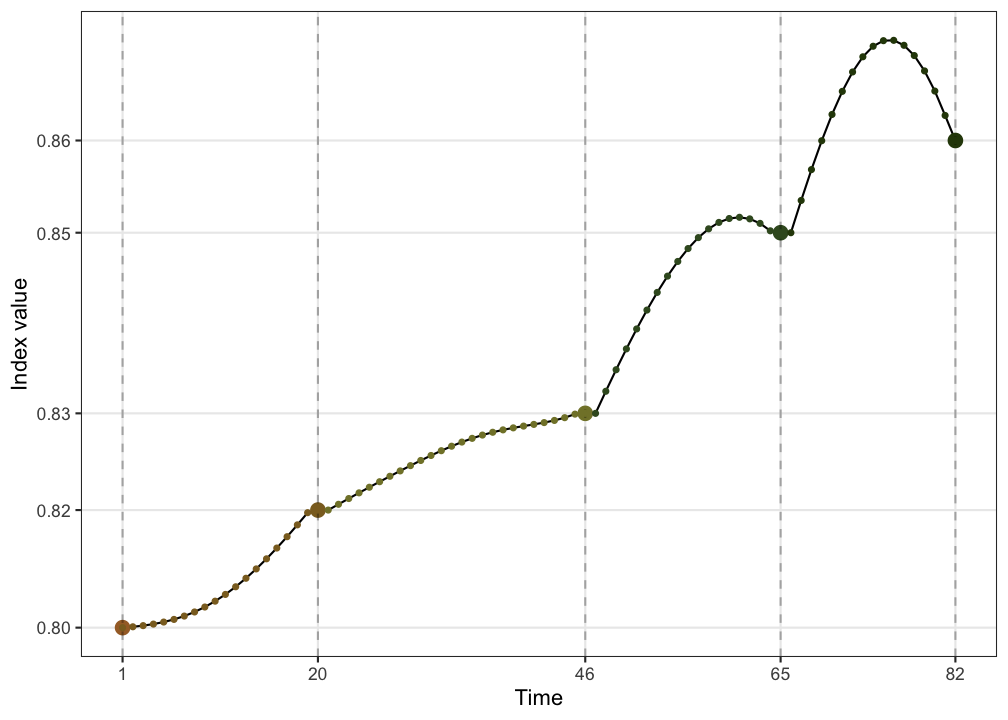

Now I'm presenting a case study on how visual diagnostics informs us about optmising a noisy index.

- The noisy index we use is based on the kolmogorove test, defined here.

- The plot on the left shows how the index value changes when interpolating between two random bases

- What I do is to generate two random bases in the 5D space and ask guided tour to interpolate 100 frames in between. The index value of all the frames are calculated and arranged by its natural order to make this plot.

- what we see here is that, comparing to the holes index, the new index

1) has a different range, and

2) also it is non-smooth

- These add challenges to the optimisation

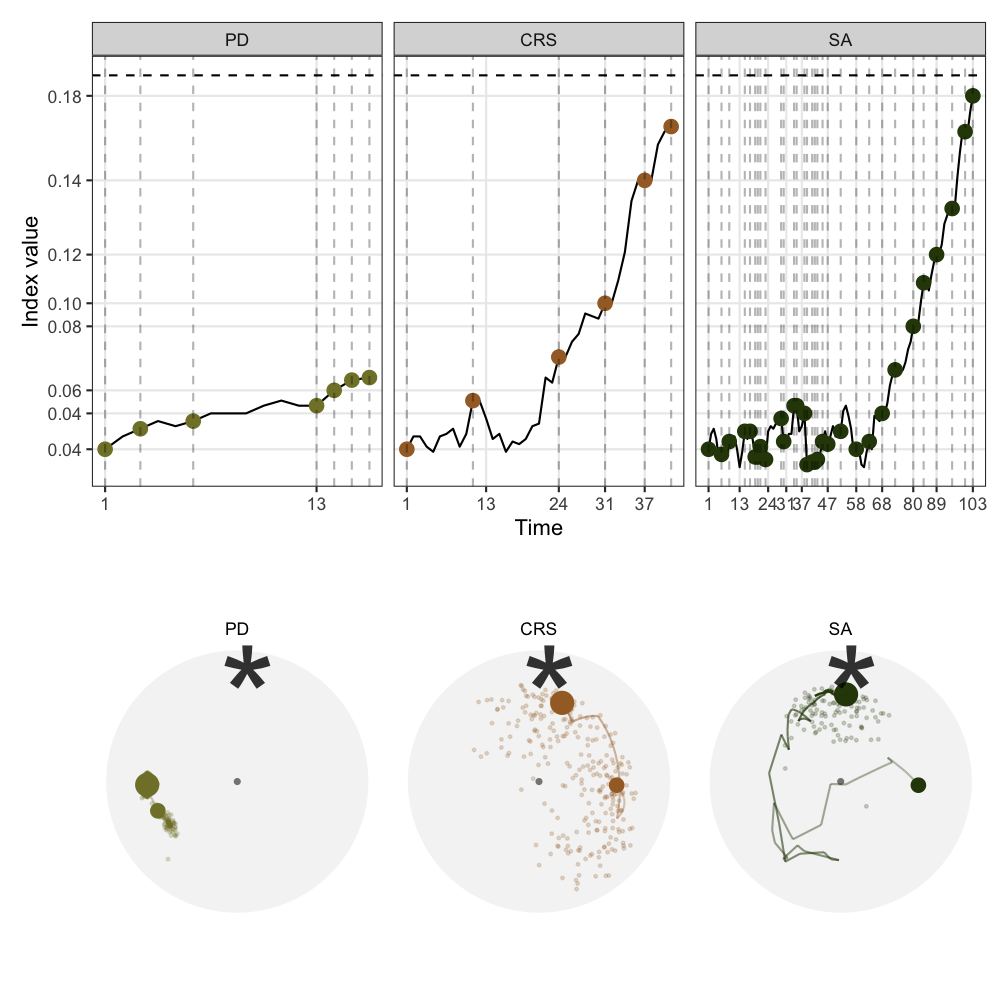

- On the right are the trace and space plot of the three optimisers I introduced earlier on the 5 variable dataset

- In the trace plot, the x-axis shows the natural order of time, y axis is the index value. The dashed horizontal line on the top is the index value of the theoretical best basis.

- we see here that the first optimiser, PD, fails to optimise the noisy index while CRS and SA are getting close to this theoretical best

- From the space plot, we can also see the success of CRS and SA but they are taking different approaches to find the final basis.

- CRS extensively evaluates points in the space before accepting a new basis to interpolate - thus we see lots of dots in the space but the interpolation path is short, while

- SA widely accepts new basis and performs interpolation and only starts to reject towards the end - thus we see a long interpolation path but few points being evaluated

Case study: optimising a noisy index

Which optimiser(s) work and which didn't?

New index: I(n)=max[FY(n)−FN(n)]

where F.(n) is the ECDF function

Now I'm presenting a case study on how visual diagnostics informs us about optmising a noisy index.

- The noisy index we use is based on the kolmogorove test, defined here.

- The plot on the left shows how the index value changes when interpolating between two random bases

- What I do is to generate two random bases in the 5D space and ask guided tour to interpolate 100 frames in between. The index value of all the frames are calculated and arranged by its natural order to make this plot.

- what we see here is that, comparing to the holes index, the new index

1) has a different range, and

2) also it is non-smooth

- These add challenges to the optimisation

- On the right are the trace and space plot of the three optimisers I introduced earlier on the 5 variable dataset

- In the trace plot, the x-axis shows the natural order of time, y axis is the index value. The dashed horizontal line on the top is the index value of the theoretical best basis.

- we see here that the first optimiser, PD, fails to optimise the noisy index while CRS and SA are getting close to this theoretical best

- From the space plot, we can also see the success of CRS and SA but they are taking different approaches to find the final basis.

- CRS extensively evaluates points in the space before accepting a new basis to interpolate - thus we see lots of dots in the space but the interpolation path is short, while

- SA widely accepts new basis and performs interpolation and only starts to reject towards the end - thus we see a long interpolation path but few points being evaluated

Summary

- We have developed specialised visualisation tools to diagnose optimisation in projection pursuit guided tour

- This allows us to understand algorithms that are not obvious to the end users

For tourr developers:

- Provides tools to test new optimisers and optimisation of new indexes

For algorithm developers:

- Can be seen as a work to enhance the interpretability of black-box optimsimation algorithms

Questions?

Package: huizezhang-sherry/ferrn

Slides: sherryzhang-user2021.netlify.app

H. Sherry Zhang

huizezhangsh https://huizezhangsh.netlify.com/

Supervised by Dianne Cook, Ursula Laa, Nicolas Langrené, and Patricia Menéndez